If we wanted to process the massive 800 million game Lichess database we would need an efficient algorithm.

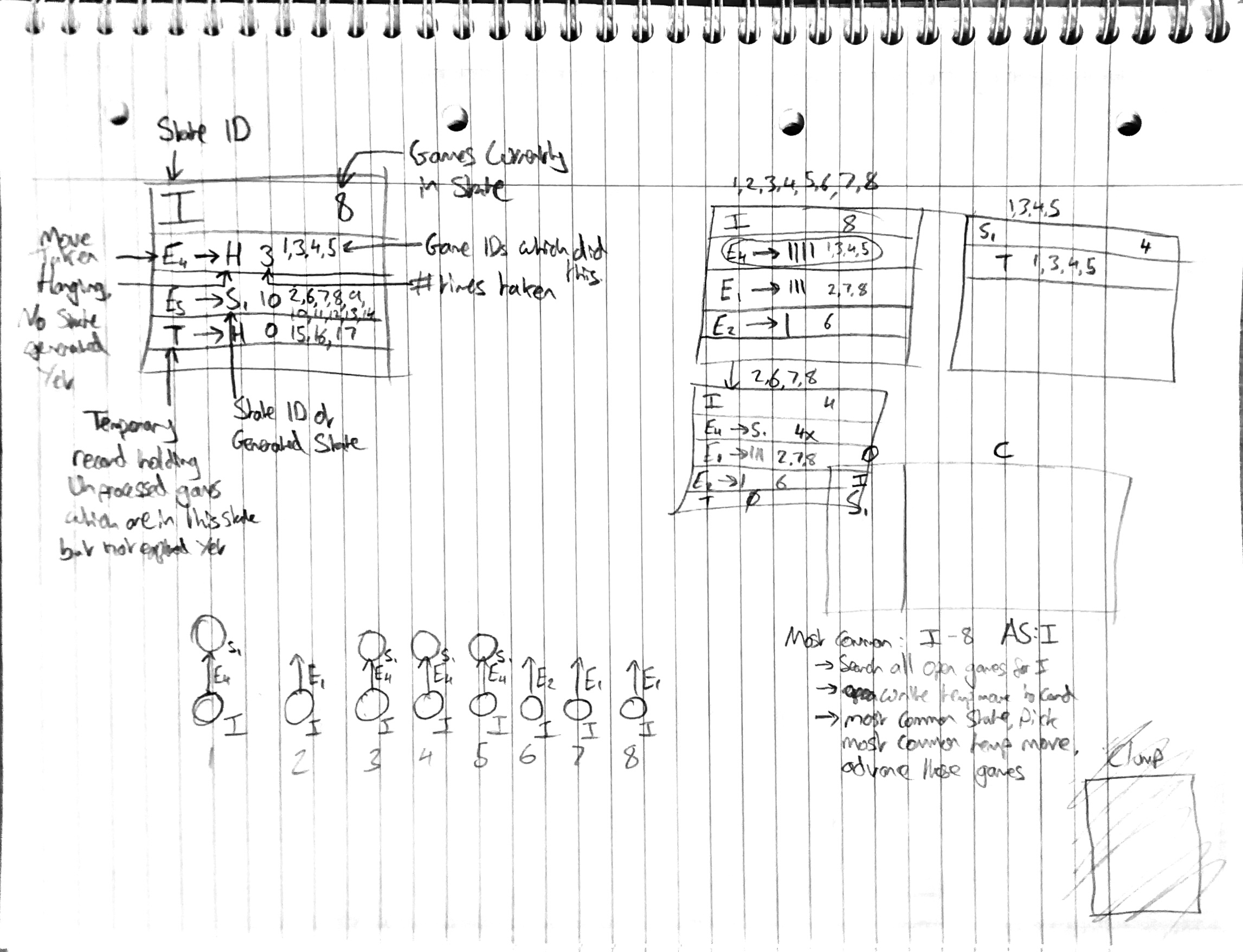

In order to reduce IO load, we batched games into groups of around 100,000 games. Within each batch the games are assigned a zobrist hash based on their current state. Each game is advanced by one move, and the new state calculated, we then group together games which have an identical state, e.g all games starting in the initial state and diverging as the game progressed. Here is a sketch of the initial plan for the algorithm:

As we advanced each state we would record the statistics we needed as state data. From the PGNs we could record win/loss rates and the Elo of the players involved for each state we processed. This produced a massive amount of data so we only processed states that had more games than a given global threshold.

Ultimately, we ended up with 60GB of data, far too much to send someone when they load a page! To let them explore openings in that kind of depth we needed some kind of server that would only send the data needed to further explore the tree, I will talk about our process for doing this in the next blog.